Mood by Touch

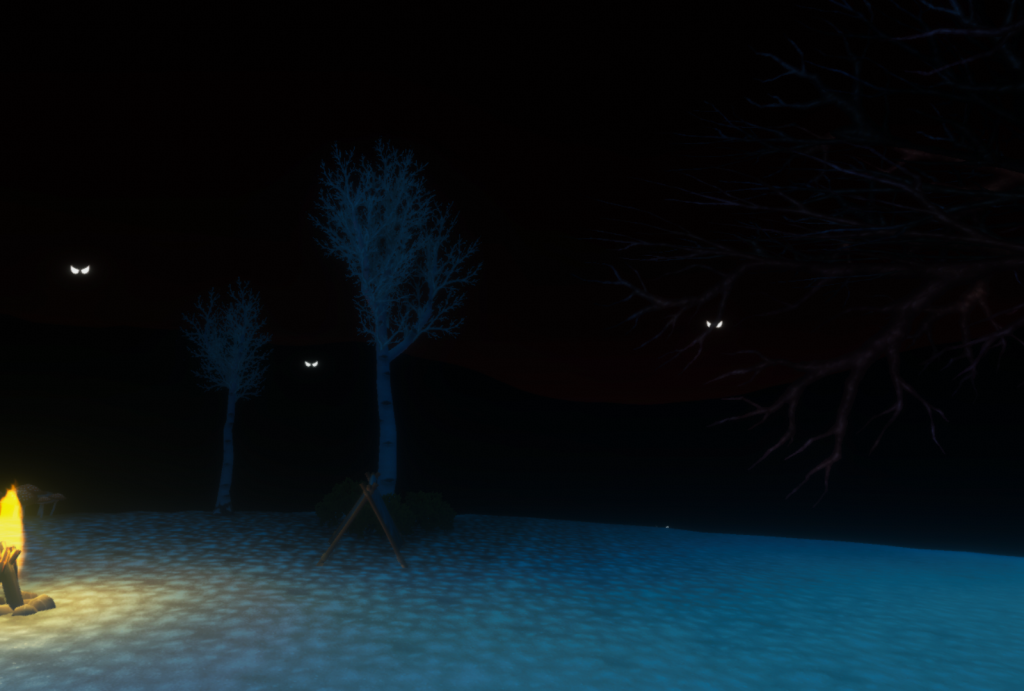

The Mood by Touch project grew out of my interest in VR, multi-modality and affective engineering. I wanted to create an experience that instills emotion through stimuli in three different modalities: visual, haptic and auditory. I built the project in Unity as one base world in four variations inspired by Plutchik’s Wheel of Emotions (1). To make sure that what I created matched what others would actually feel, I did some academic research on how to instill anger, fear, sadness and hope with visual, auditory and haptic stimuli.

Research phase

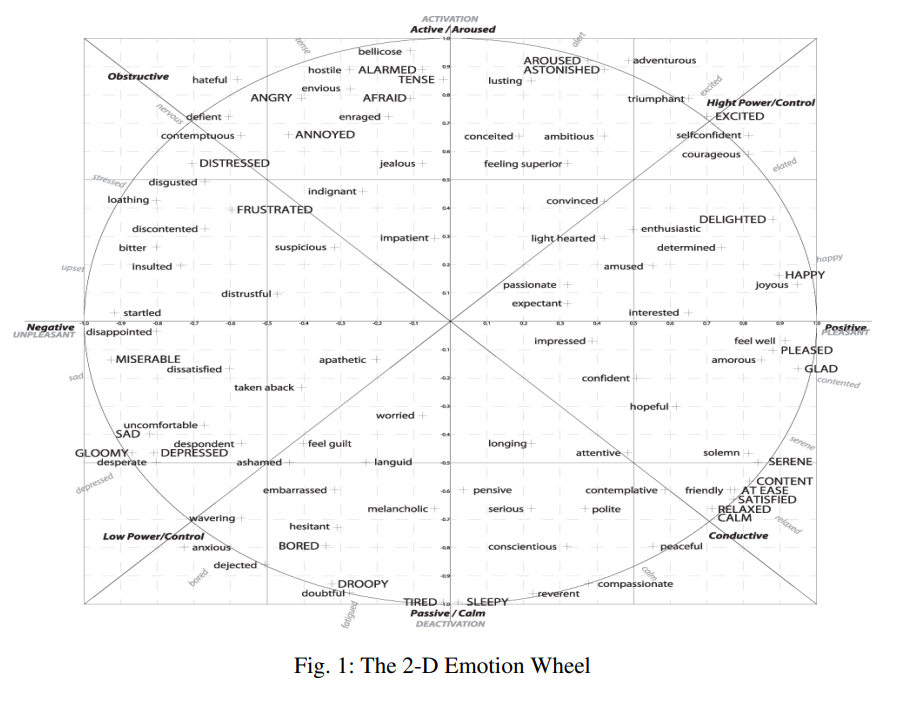

The 2D emotion wheel (4):

- Happiness: fast tempo, major harmonies, dance-like rhythm

- Fear: classical music, film scores

- Fearful music can also induce surprise and anxiety

- Sadness: slow tempo, low volume and minor key

Concept & Design

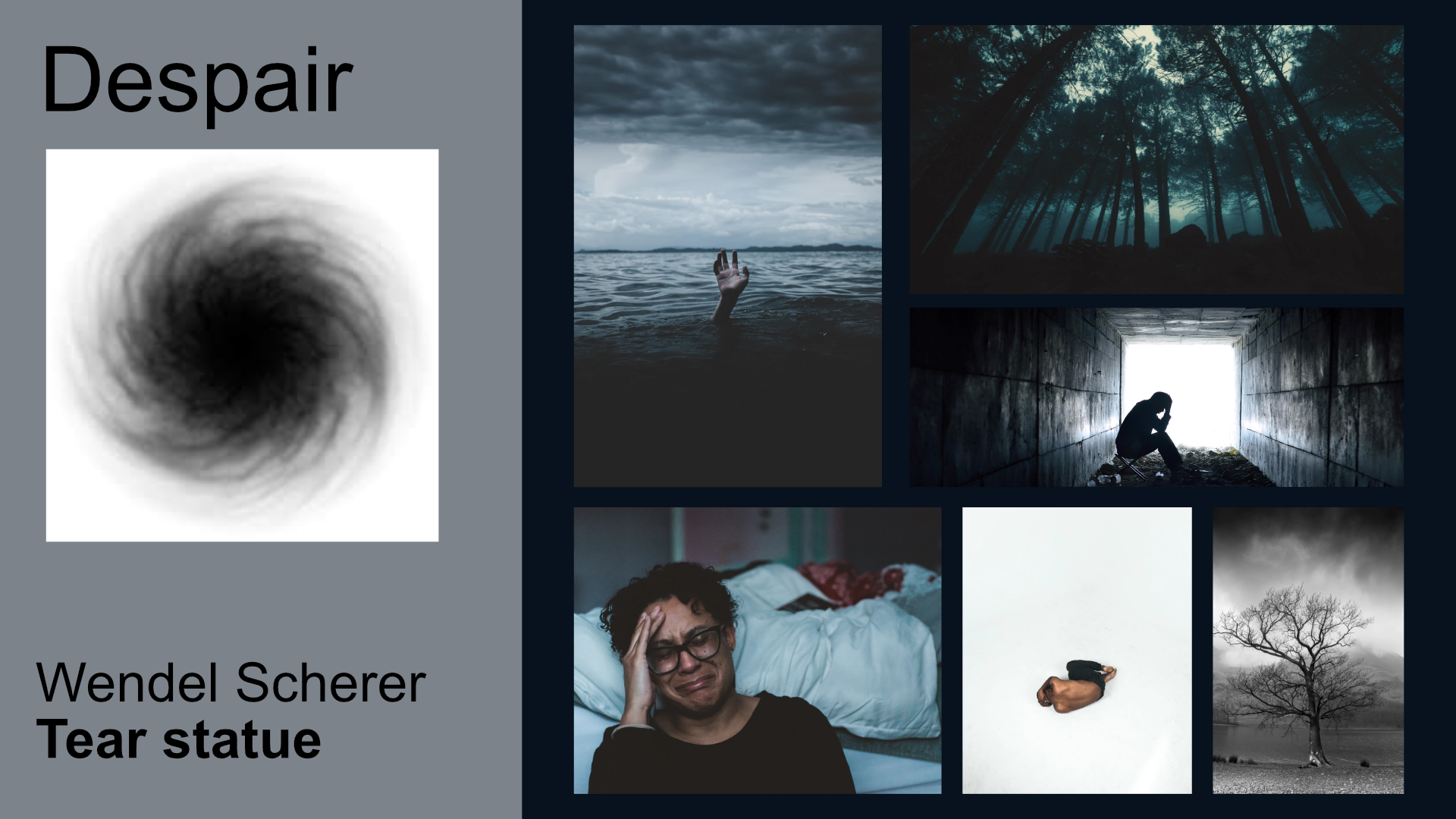

I decided that all the music should be ambient and soundscape-like. I soon realised, though, that the music was taking me somewhere other than despair and anxiety, so I dropped those two and settled on four themes: anger, fear, sadness and hope. I made some 3D sketches in Cinema 4D to work out the color palettes and to match the music I had created. Then I worked on the music some more so that it fit the visual moods even better.

Putting it all together

The result

Mood by Touch is playable in VR, but lacks the haptic aspect. Interested in trying it out for some reason? Hit me up.

References

1. https://en.wikipedia.org/wiki/Emotion_classification

2. https://news.rub.de/english/press-releases/2017-07-26-evolution-humans-identify-emotions-voices-all-air-breathing-vertebrates

3. https://www.researchgate.net/publication/273675140_Current_Emotion_Research_in_Music_Psychology

4. https://arxiv.org/pdf/1804.10938.pdf

5. https://digest.bps.org.uk/2019/08/28/heres-why-spiky-shapes-seem-angry-and-round-sounds-are-calming/

6. https://hi.stamen.com/the-shapes-of-emotions-72c3851143e2

7. https://journals.sagepub.com/doi/pdf/10.1177/1754073917749016

8. https://picular.co/